OpenAI Report Identifies Malicious Use of AI in Cloud-Based Cyber Threats

A report from OpenAI identifies the misuse of artificial intelligence in cybercrime, social engineering, and influence operations, particularly those targeting or operating through cloud infrastructure. In “Disrupting Malicious Uses of AI: June 2025,” the company outlines how threat actors are weaponizing large language models for malicious ends, and how OpenAI is pushing back.

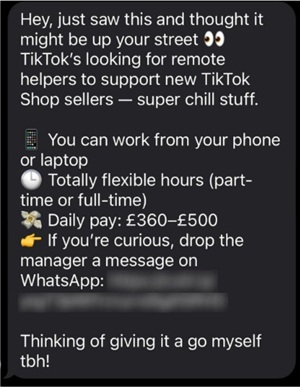

The report highlights a growing reliance on AI by adversaries to scale scams, automate phishing and deploy tailored misinformation across platforms like Telegram, TikTok and Facebook. OpenAI says it’s countering these threats using its own AI systems alongside human analysts, while coordinating with cloud providers and global security partners to take action against offenders.

In the three months since its previous update, the company says it has detected and disrupted activity including:

- Cyber operations targeting cloud-based infrastructure and software.

- Social engineering and scams scaling through AI-assisted content creation.

- Influence operations attempting to manipulate public discourse using AI-generated posts on platforms like X, TikTok, Telegram and Facebook.

The report details 10 case studies where OpenAI banned user accounts and shared findings with industry partners and authorities to strengthen collective defenses.

Here is how the company detailed the tactics, techniques, and procedures (TTPs) as presented in the discussion of one representative case, a North Korea-linked job scam operation using ChatGPT to generate fake résumés and spoof interviews:

| Activity | LLM ATT&CK Framework Category |
|---|---|
| Automating to systematically fabricate detailed résumés aligned to various tech job descriptions, personas, and industry norms. Threat actors automated generation of consistent work histories, educational backgrounds, and references via looping scripts. | LLM Supported Social Engineering |
| Threat actors utilized the model to answer employment-related, likely application questions, coding assignments, and real-time interview questions, based on particular uploaded resumes. | LLM Supported Social Engineering |
| Threat actors sought guidance for remotely configuring corporate-issued laptops to appear as if domestically located, including advice on geolocation masking and endpoint security evasion methods. | LLM-Enhanced Anomaly Detection Evasion |
| LLM assisted coding of tools to move the mouse automatically, or keep a computer awake remotely, possibly to assist in remote working infrastructure setups. | LLM Aided Development |

Beyond the employment scam case, OpenAI’s report outlines several campaigns involving threat actors abusing AI in cloud-centric and infrastructure-based attacks.

Cloud-Centric Threat Activity

Many of the campaigns OpenAI disrupted either targeted cloud environments or used cloud-based platforms to scale their impact:

- A Russian-speaking group (Operation ScopeCreep) used ChatGPT to assist in the iterative development of sophisticated Windows malware, distributed via a trojanized gaming tool. The campaign leveraged cloud-based GitHub repositories for malware distribution and used Telegram-based C2 channels.

- Chinese-linked groups (KEYHOLE PANDA and VIXEN PANDA) used ChatGPT to support AI-driven penetration testing, credential harvesting, network reconnaissance, and automation of social media influence. Their targets included US federal defense industry networks and government communications systems.

- An operation dubbed Uncle Spam, also linked to China, generated polarizing US political content using AI and pushed it via social media profiles on X and Bluesky.

- Wrong Number, likely based in Cambodia, used AI-generated multilingual content to run task scams via SMS, WhatsApp, and Telegram, luring victims into cloud-based crypto payment schemes.

Defensive AI in Action

OpenAI says it’s using AI as a “force multiplier” for its investigative teams, enabling it to detect abusive activity at scale. The report also highlights how using AI models can paradoxically expose malicious actors by providing visibility into their workflows.

“AI investigations are an evolving discipline,” the report notes. “Every operation we disrupt gives us a better understanding of how threat actors are trying to abuse our models, and enables us to refine our defenses.”

The company calls for continued collaboration across the industry to strengthen defenses, noting that AI is only one part of the broader internet security ecosystem.

For cloud architects, platform engineers and security professionals, the report is a useful read. It illustrates not only how attackers are using AI to speed up traditional tactics, but also how cloud-based services are central both to their targets and to the infrastructure of modern threat campaigns.

The full report is available on the OpenAI site.